The Evolution and Impact of Generative Pre-trained Transformers (GPT): An In-Depth Analysis

Introduction

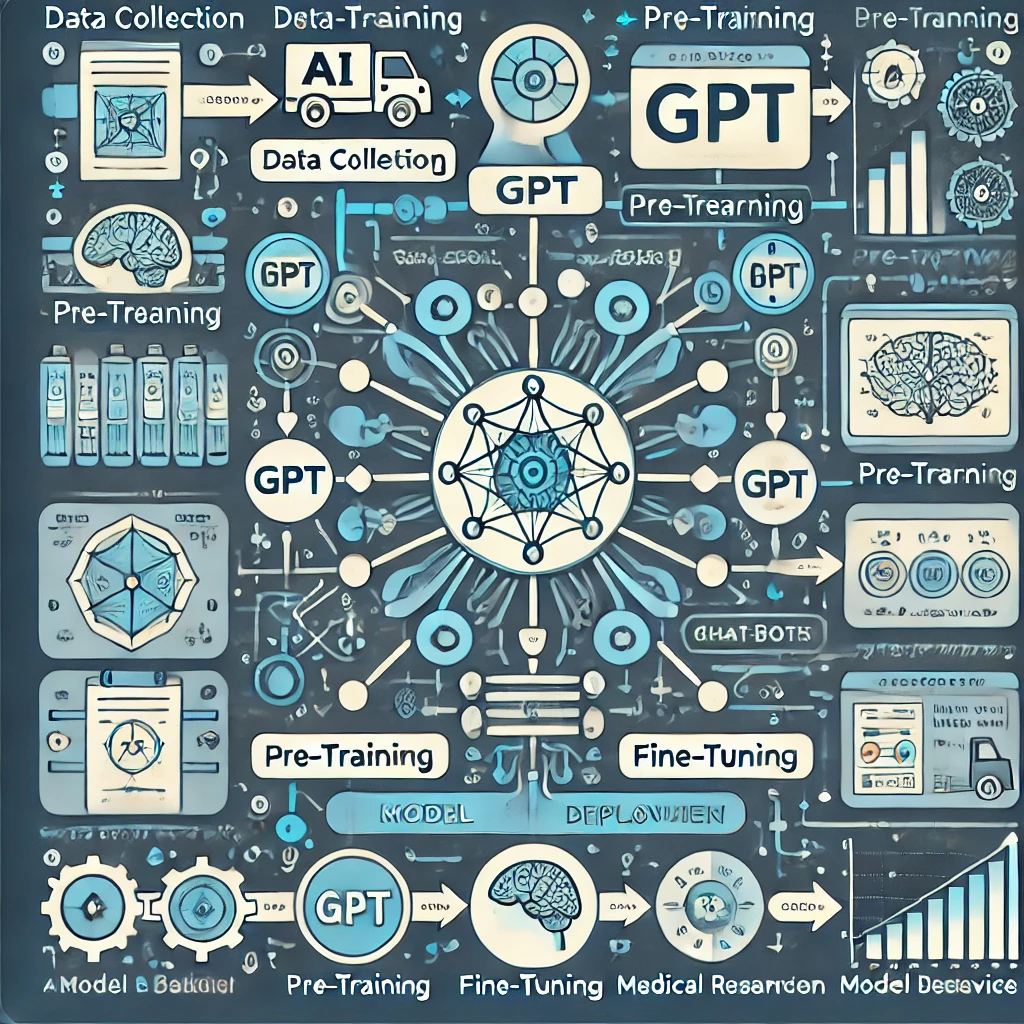

Generative Pre-trained Transformers (GPT) are a family of large language models developed by OpenAI that process and generate human-like text. They use deep learning to reach a level of language understanding and generation that earlier systems could not match, and they have changed how work is done in fields such as content creation, customer support, and education. This article analyzes how GPT models function, how they are developed, what they cost, and where they are applied in practice.

How GPT Models Work

GPT models are built on the transformer architecture, a neural network design that relies on self-attention mechanisms to process and generate text. Because self-attention lets each token draw directly on the tokens that came before it, the model retains context over long passages and predicts the next token more accurately than earlier natural language processing (NLP) approaches such as n-gram or recurrent models.
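As a rough illustration of the self-attention idea, the sketch below implements scaled dot-product attention with a causal mask in plain Python. It is a minimal sketch, not OpenAI's implementation: the function names and the toy token vectors are assumptions made for this example, and a real GPT model derives its queries, keys, and values from learned projections and runs many attention heads in parallel.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention where position i only sees positions 0..i."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        # Score the current token against itself and every earlier token.
        scores = [
            sum(qx * kx for qx, kx in zip(q, keys[j])) / math.sqrt(d_k)
            for j in range(i + 1)
        ]
        weights = softmax(scores)
        # The output is a weighted average of the visible value vectors.
        out = [
            sum(w * values[j][d] for j, w in enumerate(weights))
            for d in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token vectors (hypothetical values), used as Q, K, and V alike.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_self_attention(tokens, tokens, tokens)
for i, row in enumerate(weights):
    print(i, [round(w, 3) for w in row])
```

Each printed row holds the attention weights for one position. The first token can only attend to itself, while later tokens spread their weight over everything seen so far, which is how context is carried forward.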

Core Components of GPT Models

- Self-Attention Mechanism: Lets the model weigh how relevant each word in the context is to the word it is currently processing, which keeps outputs coherent across a sentence or passage.

- Layered Processing: Deep neural networks with multiple layers allow for complex pattern recognition and context understanding.

- Pre-training and Fine-tuning:

  - Pre-training: GPT is trained on massive datasets comprising text from books, articles, and websites, helping it learn language structures and context.

  - Fine-tuning: After pre-training, the model is optimized for specific applications, such as customer support chatbots or medical diagnosis tools.
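Pre-training on raw text is usually framed as next-token prediction: the model sees a prefix and is penalized when it assigns low probability to the token that actually follows. The sketch below shows that objective in plain Python. The helper names and the tiny four-token vocabulary are hypothetical, and a real training run would compute the same quantity over billions of tokens with a deep learning framework.

```python
import math

def training_pairs(token_ids):
    """Pair every token with the token that follows it."""
    return list(zip(token_ids[:-1], token_ids[1:]))

def next_token_loss(predicted_probs, target_ids):
    """Average negative log-likelihood of the true next tokens."""
    losses = [
        -math.log(probs[target])
        for probs, target in zip(predicted_probs, target_ids)
    ]
    return sum(losses) / len(losses)

# A toy sequence over a hypothetical vocabulary of 4 token ids.
sequence = [0, 2, 1, 3]
pairs = training_pairs(sequence)
targets = [nxt for _, nxt in pairs]

# An untrained model that guesses uniformly over the 4 tokens.
uniform = [[0.25, 0.25, 0.25, 0.25] for _ in pairs]
print(next_token_loss(uniform, targets))
```

Training lowers this loss by shifting probability toward the tokens that really occur next. Fine-tuning typically reuses the same objective on a smaller, task-specific dataset.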

Development Process of GPT Models

The development of GPT models involves several intricate steps, each requiring significant computational power and expertise.

1. Data Collection and Processing

The training data is sourced from diverse text corpora, including news articles, academic papers, and online discussions. This extensive dataset enables GPT models to learn from a wide range of writing styles and subjects.
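Before training, collected text is typically cleaned and deduplicated. The following is a minimal sketch of that kind of processing, assuming a simple pipeline that normalizes whitespace, drops very short documents, and removes exact duplicates by hash. The function names and the `min_words` threshold are illustrative assumptions; production pipelines add steps such as language filtering and near-duplicate detection.

```python
import hashlib
import re

def normalize(text):
    """Collapse runs of whitespace and trim the ends."""
    return re.sub(r"\s+", " ", text).strip()

def clean_corpus(documents, min_words=5):
    """Drop near-empty documents and case-insensitive exact duplicates."""
    seen = set()
    kept = []
    for doc in documents:
        text = normalize(doc)
        if len(text.split()) < min_words:
            continue  # too short to be useful training text
        digest = hashlib.sha256(text.lower().encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # already kept an identical document
        seen.add(digest)
        kept.append(text)
    return kept

raw = [
    "The  quick brown fox jumps.",
    "the quick brown fox   jumps.",
    "Too short",
    "A second, different document about transformers.",
]
cleaned = clean_corpus(raw)
print(cleaned)
```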

2. Training the Model

Training a GPT model is a computationally intensive task that requires:

- High-Performance GPUs: Large clusters of graphics processing units (GPUs) run the training computation for weeks or months.

- Extensive Cloud Infrastructure: Cloud-based computing platforms, such as those offered by Microsoft Azure or Google Cloud, provide the necessary resources.

3. Optimization and Testing

Once trained, the model is optimized to ensure efficient performance and accuracy. Testing involves:

- Evaluating response coherence

- Identifying and mitigating biases

- Ensuring factual correctness
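One simple way to check factual correctness is to run the model against prompts with known answers and measure how often it matches. The sketch below assumes a hypothetical `model_fn` callable that maps a prompt string to an answer string. `toy_model` is a stand-in with canned replies, not a real GPT call, and exact matching is only one of many possible scoring rules.

```python
def exact_match_accuracy(model_fn, cases):
    """Fraction of prompts whose answer matches the reference, ignoring case."""
    correct = 0
    for prompt, expected in cases:
        answer = model_fn(prompt)
        if answer.strip().lower() == expected.strip().lower():
            correct += 1
    return correct / len(cases)

def toy_model(prompt):
    """Stand-in for a real model: returns canned answers, one of them wrong."""
    canned = {"Capital of France?": "Paris", "2 + 2 = ?": "5"}
    return canned.get(prompt, "")

cases = [("Capital of France?", "paris"), ("2 + 2 = ?", "4")]
accuracy = exact_match_accuracy(toy_model, cases)
print(accuracy)
```

The same loop structure can be reused for coherence or bias checks by swapping the comparison for a different scoring function.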

Cost of Developing GPT Models

Developing and maintaining a GPT model incurs substantial costs. These expenses can be broadly categorized into:

1. Hardware Costs

- Training a large GPT model can require thousands of GPUs running in parallel.

- Power consumption and cooling systems add to operational costs.

2. Data Acquisition and Storage

- Collecting and processing vast amounts of text data involves considerable storage and management expenses.

3. Human Resource Expenses

- AI researchers and engineers earn high salaries due to their specialized expertise.

4. Energy Consumption

- Running large AI models demands significant electricity usage, leading to high energy bills.

5. Software and Maintenance

- Continuous updates and fine-tuning require ongoing investment in software development and debugging.
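To get a feel for how hardware and energy costs scale, a common rule of thumb estimates training compute for a dense transformer as roughly 6 floating-point operations per parameter per training token. The sketch below turns that into GPU hours and a dollar figure. Every input in the example call (model size, token count, GPU throughput, utilization, and hourly price) is a hypothetical placeholder, not a figure for any actual GPT model.

```python
def estimate_training_cost(params, tokens, gpu_flops_per_sec,
                           utilization, price_per_gpu_hour):
    """Back-of-the-envelope training cost from the ~6 * N * D compute rule."""
    total_flops = 6 * params * tokens
    # Real workloads reach only a fraction of a GPU's peak throughput.
    effective_flops_per_sec = gpu_flops_per_sec * utilization
    gpu_hours = total_flops / effective_flops_per_sec / 3600
    return gpu_hours, gpu_hours * price_per_gpu_hour

# Hypothetical inputs chosen only to show the arithmetic.
gpu_hours, dollars = estimate_training_cost(
    params=1e9,               # 1 billion parameters
    tokens=20e9,              # 20 billion training tokens
    gpu_flops_per_sec=100e12, # assumed peak of 100 TFLOP/s per GPU
    utilization=0.4,          # assumed 40% of peak actually achieved
    price_per_gpu_hour=2.0,   # assumed cloud price in dollars
)
print(f"{gpu_hours:,.0f} GPU hours, about ${dollars:,.0f}")
```

Because the estimate is linear in both parameters and tokens, scaling either by 10x raises the compute bill by roughly 10x, before counting storage, staff, and maintenance.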

Real-World Applications of GPT Models

GPT models are already transforming numerous industries. Below are key applications:

1. Content Creation and Marketing

- Automating blog writing, ad copy, and social media posts.

- Enhancing creativity in storytelling and scriptwriting.

- Streamlining SEO-optimized content production for websites.

2. Customer Service Automation

- Chatbots providing 24/7 support to customers with personalized responses.

- Reducing operational costs for businesses by minimizing human intervention.

- Handling multilingual queries efficiently for global businesses.

3. Education and Learning Assistance

- AI-powered tutoring systems that offer personalized lessons and feedback.

- Automated content summarization to help students grasp key concepts quickly.

- Enhancing language learning through AI-driven conversation simulations.

4. Healthcare and Medical Research

- Assisting doctors in diagnosing diseases based on medical literature analysis.

- Generating summaries of medical studies for quick reference.

- Developing AI-powered diagnostic chatbots for patient self-assessment.

5. Translation and Multilingual Communication

- Breaking language barriers by providing accurate translations in real time.

- Enabling businesses to expand globally with multilingual AI assistants.

- Supporting instant speech-to-text and text-to-speech services when paired with speech recognition and synthesis systems.

Economic and Ethical Considerations

While GPT models offer immense potential, they also raise significant ethical and economic concerns.

1. Job Displacement vs. Job Creation

- AI is automating repetitive tasks, reducing the need for human intervention in some roles.

- However, AI-driven industries are creating new job opportunities in AI research, development, and oversight.

- Employees must upskill to work alongside AI rather than be replaced by it.

2. Bias and Ethical Risks

- Training data can inadvertently introduce biases, leading to problematic AI responses.

- Developers must implement robust mitigation strategies to ensure fairness and accuracy.

- Continuous monitoring is necessary to prevent AI-generated misinformation.

3. Energy Consumption and Environmental Impact

- AI models require vast amounts of energy, contributing to environmental concerns.

- Future AI development must focus on improving energy efficiency.

- Companies are researching ways to integrate green computing practices into AI training.

The Future of GPT and AI Development

GPT technology is continuously evolving, with upcoming versions expected to feature:

- Improved Accuracy: Enhanced fact-checking mechanisms to reduce misinformation.

- Better Multimodal Capabilities: Integrating text, image, and video processing for more comprehensive AI applications.

- Higher Efficiency: Reducing computational power requirements while maintaining performance.

- Enhanced Personalization: More adaptive AI responses tailored to user preferences and past interactions.

- Greater Interactivity: Allowing real-time voice-based AI interactions for seamless human-machine communication.

Conclusion

Generative Pre-trained Transformers have revolutionized AI-driven applications across industries, making them invaluable tools for businesses and individuals alike. While development costs are substantial, the benefits in automation, efficiency, and accessibility make these investments worthwhile. As AI continues to advance, ethical considerations and sustainability must remain at the forefront to ensure responsible innovation.